Customer onboarding is one of the most crucial components of an organization’s customer success. Fast, high-quality onboarding creates a good first impression, fostering long-term relationships and boosting product and service adoption.

However, when this process is compromised by fraud such as deepfakes, it can seriously damage the credibility of companies and financial institutions, eroding customer trust and degrading the customer experience.

Deepfake scams use Artificial Intelligence (AI) to generate highly realistic images, audio, and videos that mimic real individuals, and use them to manipulate processes such as customer onboarding and automated Know Your Customer (KYC) checks.

While major banks and businesses rely on identity verification methods such as facial recognition and iris scanning to secure their onboarding processes, deepfake scammers exploit these very systems to gain unauthorized access for malicious purposes.

Deepfake incidents in the FinTech industry increased by 700% in 2023, making it imperative for banks to take steps to curb these risks.

This article explores deepfake scams in detail and how you can prevent them in the customer onboarding process.

What Is a Deepfake and How Does It Work?

A deepfake attack is a sophisticated form of digital impersonation that uses advanced AI and machine learning (ML) algorithms. Although deepfake technology was initially developed for research and entertainment purposes, it has since been exploited by scammers and fraudsters.

One of the earliest widely publicized deepfakes is a 2018 video of former U.S. President Barack Obama delivering a speech he never gave. It was created by actor and filmmaker Jordan Peele and BuzzFeed as a warning about fake news: Peele’s mouth was superimposed onto footage of Obama, and Obama’s jawline was reshaped to follow Peele’s mouth movements, so that Obama appeared to be delivering Peele’s words.

To make the video more realistic, the creators refined it with FakeApp, a face-swapping application, which required more than 50 hours of automated processing.

Deepfake scams typically involve training deep learning models on footage of a specific target individual, allowing the models to learn and mimic that person’s minute details, movements, and facial expressions.

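Deepfake generation typically relies on deep learning architectures such as autoencoders and generative adversarial networks (GANs), often built from convolutional neural networks, to learn a target’s facial features and reproduce them in new images and videos. The imperfections this process leaves behind, such as facial artifacts and spatio-temporal inconsistencies between frames, are what many detection systems look for.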

How Do Deepfake Scams Impact Customer Onboarding?

Audio- and video-based digital onboarding processes are especially vulnerable to deepfake scams, because deepfakes can produce convincing synthetic impersonations of genuine customers that trick identity verification systems.

Fraudsters often exploit available data and use generative AI to fabricate synthetic biometric data, including cloned voices and manipulated facial features and expressions. This enables them to gain unauthorized access to user accounts, devices, sensitive and confidential information, and secure resources.

In customer onboarding, deepfake scams also target identity documents, such as government-issued IDs, manipulating them to create realistic but fraudulent identities.

This affects customer onboarding in several ways: it increases fraud risk, erodes trust between banks and customers, creates compliance challenges and exposure to legal penalties, delays onboarding, and raises the cost of defending against emerging deepfake threats.

Deepfake scams are therefore a particular threat to user onboarding processes and systems, where authenticating and verifying a user’s identity is paramount, which makes advanced AI-based fraud detection systems essential.

Deepfake Techniques Used By Scammers For Unauthorized Onboarding

Deepfakes enable several types of banking fraud that scammers use during user onboarding to gain unauthorized access to user accounts. Some of these techniques include:

1. Synthetic Identity Theft

Creating an entirely new, synthetic identity is a primary technique fraudsters use in deepfake scams during customer onboarding. Fraudsters alter critical details in identity documents, such as passports, Aadhaar cards, driving licenses, or other government-issued IDs, and may even replace the photos in those documents with deepfaked images.

By combining real and fake information in this way, fraudsters create identities that are difficult to authenticate, making it hard to distinguish genuine documents from fraudulent ones.

This sophisticated technique poses a significant threat to identity verification systems, making deepfakes a serious challenge for banks and financial institutions.

2. Social Engineering Scams

Another popular technique is social engineering, which involves manipulating users into entering their credentials on fake websites and login pages, or hijacking their active sessions to gain unauthorized access.

In this scam, fraudsters either build fake websites and redirect users to them, or deploy chatbots and mimic conversations with genuine authorities, deceiving users into revealing their credentials or granting the scammers access.

3. Phishing Scams And Imitating Important People

This scam typically involves producing audio and video clips that mimic senior figures, such as a company’s CEO or a high-level security manager.

It is a growing and increasingly common type of deepfake scam: by exploiting a senior executive’s identity, fraudsters can instruct subordinates and junior employees to make large transactions, share critical or confidential files, or grant access to private or sensitive systems.

In one recent case, a finance worker at a multinational firm’s Hong Kong office paid out $25 million to fraudsters who used deepfake technology to pose as the company’s chief financial officer on a video conference call.

4. Fraudulent Communications

Fraudsters combine deepfake audio and video with spoofed phone numbers or email addresses, making their communications appear to come from legitimate sources.

Fraudsters often use these deceptive communications during user onboarding to pass identity verification, validate false identities, or access sensitive information.

5. Voice Cloning

Voice cloning allows scammers to mimic and impersonate an authentic individual’s voice using deepfake technology with high accuracy.

By combining deepfake and voice synthesis tools, fraudsters try to defeat authentication systems that rely on voice recognition, gaining unauthorized access to user accounts and carrying out malicious activities such as fraudulent transactions.

Scammers can also use cloned voices to generate custom, tailored messages, making it easier to deceive both their targets and authentication and verification systems.

In one recent incident of deepfake voice cloning, fraudsters cloned the voice of Mark Read, CEO of WPP, and set up a Microsoft Teams meeting using his image, impersonating Read and another WPP executive and asking a colleague to set up a new business in an attempt to obtain money and personal details.

Fortunately, the scam was unsuccessful, thanks to the vigilance of the company’s executives.

Tools Used By Scammers To Conduct Deepfake Attacks

Scammers use a variety of sophisticated tools to conduct deepfake attacks and deceive identity verification systems:

- Audio and video deepfakes: These use AI to synthesize and manipulate users’ voices and create highly realistic videos that pass audio and video verification during user onboarding, making the system believe a genuine user is trying to log in. These tools help scammers bypass authentication systems and gain access to sensitive data and information.

- Realistic chatbots: Scammers leverage sophisticated chatbots and virtual assistants powered by Natural Language Processing (NLP) to emulate human interactions by impersonating genuine users and individuals. They also impersonate customer service representatives to lead users through the user onboarding process and gather sensitive information.

- TTS and STT tools: Scammers use text-to-speech (TTS) systems to convert text into spoken audio and speech-to-text (STT) systems to convert speech into text. Combined with deepfake technology, these tools automate phishing attempts against onboarding processes that require voice recognition and create convincing phone interactions.

- Face-swapping tools: These tools use deep learning techniques and capabilities to replace a person’s face with another in videos and images, creating fake identification images or documents to deceive identity verification systems. Moreover, face swapping can also be used to impersonate individuals in video calls in real-time, making it challenging for banks and organizations to distinguish legitimate users from impersonators.

How To Prevent Deepfake Scams In User Onboarding?

Global research indicates that the deepfake detection market is expected to grow at a compound annual growth rate (CAGR) of 42%, from $3.86 billion in 2022 through 2026.
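As a quick sanity check on these figures, assuming four years of compounding from 2022 to 2026, the standard compound-growth formula puts the 2026 market at roughly four times its 2022 size. This projection is derived from the numbers above, not quoted from the research:

```latex
V_{2026} = V_{2022}\,(1 + r)^{n}
         = 3.86 \times (1 + 0.42)^{4}
         \approx 3.86 \times 4.066
         \approx 15.7 \ \text{(billion USD)}
```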

Banks and global organizations are therefore investing heavily in advanced technologies to prevent fraud such as deepfakes. Here are a few ways you can prevent deepfake scams in customer onboarding:

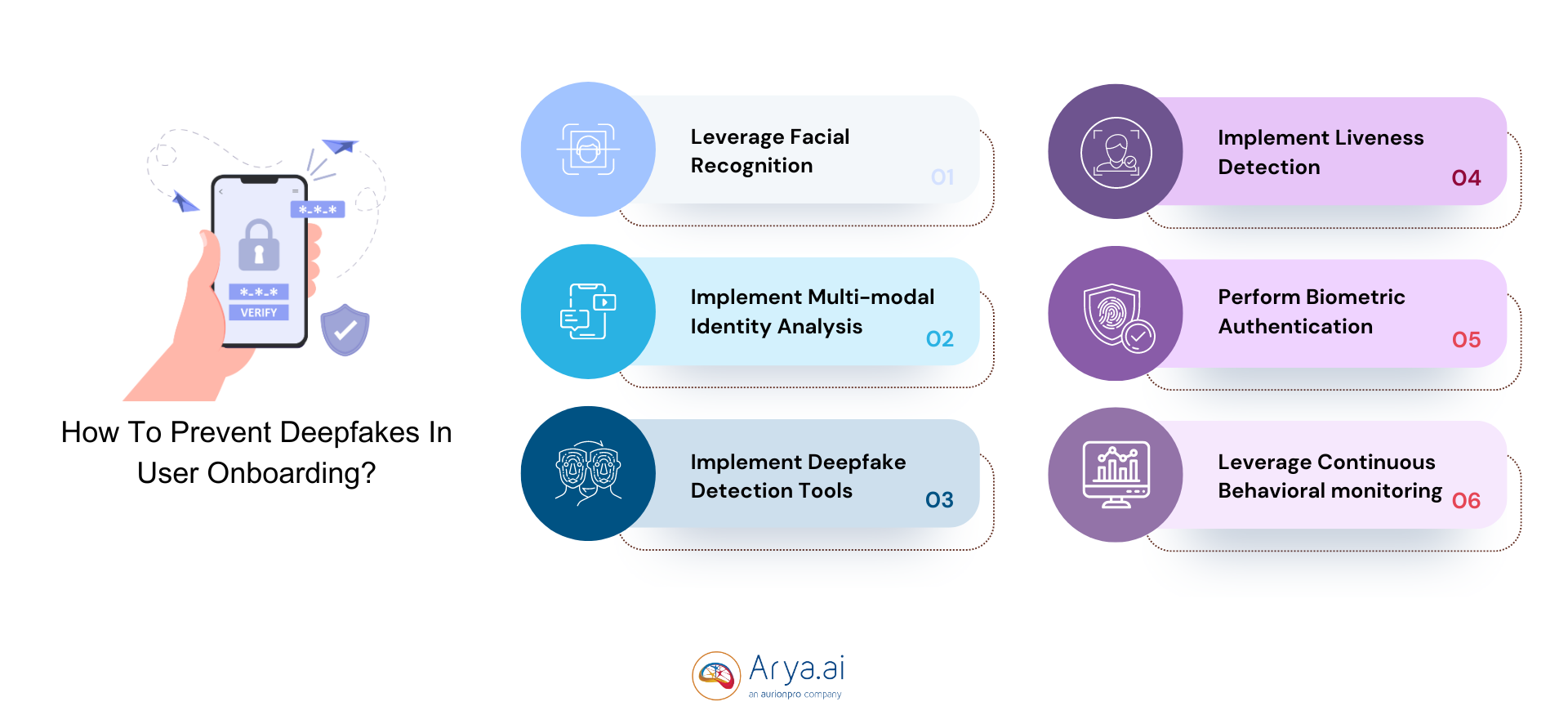

1. Leverage Facial Recognition

Facial recognition and facial analysis techniques are key to detecting AI-driven fraud and deepfake scams, especially during real-time transactions and onboarding. They analyze the user’s facial expressions and gestures to detect unusual patterns or anomalies.

They also analyze lip synchronization, checking whether mouth movements match the audio, to determine whether the video shows a legitimate user or has been tampered with or impersonated. Combined with micro-expression analysis, facial recognition goes a long way toward detecting deepfakes before they can damage a company’s reputation.

2. Implement Multi-modal Identity Analysis

The multi-modal analysis approach in identity verification combines multiple detection approaches to simultaneously analyze the user’s behavior, facial expressions, and more.

The different approaches used in the analysis are:

- Facial recognition matches the user’s facial features against stored reference images, such as the photo on an ID document, to confirm the identity or detect inconsistencies.

- Biometric authentication uses physical traits unique to the user, such as iris patterns and fingerprints.

- Document identity verification checks the user’s government-issued IDs, such as passports, Aadhaar cards, PAN cards, and driving licenses, to confirm their identity. Intelligent document processing automates the extraction and verification of data from large volumes of documents, making document verification more efficient and accurate.
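Document checks often start with cheap consistency tests before any AI is involved. As one concrete example, passports carry a machine-readable zone (MRZ) whose fields are protected by check digits defined in ICAO Doc 9303: each character maps to a value (digits as themselves, letters A to Z as 10 to 35, the filler `<` as 0), the values are multiplied by the repeating weights 7, 3, 1, and the sum modulo 10 is the check digit. The sketch below implements that rule; the function names are illustrative. It catches typos and crude edits to MRZ fields, but not a deepfaked photo, so it complements rather than replaces image-based checks.

```python
from itertools import cycle

MRZ_WEIGHTS = (7, 3, 1)  # ICAO Doc 9303 repeating weight pattern


def mrz_char_value(ch: str) -> int:
    """Numeric value of one MRZ character."""
    if "0" <= ch <= "9":
        return ord(ch) - ord("0")
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10
    if ch == "<":
        return 0  # filler character
    raise ValueError(f"invalid MRZ character: {ch!r}")


def mrz_check_digit(field: str) -> int:
    """Weighted sum of character values, modulo 10."""
    return sum(mrz_char_value(ch) * w for ch, w in zip(field, cycle(MRZ_WEIGHTS))) % 10


def mrz_field_is_valid(field: str, check_char: str) -> bool:
    """True if the printed check character matches the computed digit."""
    return (
        len(check_char) == 1
        and "0" <= check_char <= "9"
        and int(check_char) == mrz_check_digit(field)
    )
```

For example, the ICAO specimen passport number `L898902C3` carries check digit 6, which `mrz_check_digit("L898902C3")` reproduces; changing any character of the number makes `mrz_field_is_valid` fail for that printed digit in most cases.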

According to Gartner, by 2026, attacks using AI-generated deepfakes on face biometrics will lead 30% of enterprises to no longer consider identity verification and authentication solutions reliable in isolation. This makes the multi-modal approach a key defense against deepfake attacks.

Combining different verification methods therefore allows banks and financial organizations to build a much more secure identity verification and onboarding system, reducing deepfake risks.
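A minimal sketch of how a multi-modal decision might combine these signals, assuming each check already produces a score between 0 and 1 and that face matching compares embedding vectors from some face-recognition model (not shown). The weights, the per-check floor, and the accept/review cut-offs are hypothetical illustration values, not recommendations.

```python
import math
from dataclasses import dataclass
from typing import Sequence

# Hypothetical weights (summing to 1) and cut-offs, for illustration only.
WEIGHTS = {"face_match": 0.40, "liveness": 0.35, "document": 0.25}
PER_CHECK_FLOOR = 0.30
ACCEPT_AT = 0.75
REVIEW_AT = 0.55


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two face embeddings (e.g. selfie vs. ID photo)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / norm


@dataclass
class CheckScores:
    face_match: float  # e.g. max(0, cosine similarity), in [0, 1]
    liveness: float    # from a liveness detector, in [0, 1]
    document: float    # from document verification, in [0, 1]


def decide(scores: CheckScores) -> str:
    """Return "accept", "manual_review", or "reject"."""
    values = {
        "face_match": scores.face_match,
        "liveness": scores.liveness,
        "document": scores.document,
    }
    # A single failed check is not allowed to be outvoted by the others.
    if min(values.values()) < PER_CHECK_FLOOR:
        return "reject"
    fused = sum(WEIGHTS[name] * value for name, value in values.items())
    if fused >= ACCEPT_AT:
        return "accept"
    if fused >= REVIEW_AT:
        return "manual_review"
    return "reject"
```

The per-check floor reflects the multi-modal idea: a perfect face match should not compensate for a failed liveness check, because a deepfake may be built precisely to win on one signal.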

3. Implement Robust Deepfake Detection Tools

Advanced deepfake detection technologies that analyze users’ biometric features, identity document fraud, transaction history, and liveness indicators are essential for preventing deepfake risks.

These tools are pivotal in the user onboarding process, bringing multiple benefits such as high accuracy, efficiency, and scalability. They can handle a large volume of data and adapt to become more proficient and accurate over time.

However, deepfake techniques keep evolving, so detection tools must evolve too. Regularly updating the algorithms and expanding the training datasets with diverse, recent examples is critical to maintaining the tools’ detection capabilities.
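Part of keeping a detector current is recalibrating its decision threshold whenever the model or the data changes. The sketch below assumes a hypothetical detector that outputs a score where higher means “more likely fake”, plus a fresh labeled sample of genuine and known-deepfake sessions. It picks the threshold that flags at most a chosen fraction of genuine users, then reports how many known deepfakes that threshold catches. Retraining the model itself is out of scope here.

```python
from typing import Sequence


def calibrate_threshold(genuine_scores: Sequence[float], max_false_flag_rate: float) -> float:
    """Return a threshold t such that at most max_false_flag_rate of the
    genuine sessions have a score strictly greater than t."""
    if not genuine_scores:
        raise ValueError("need at least one genuine score")
    if not 0.0 <= max_false_flag_rate < 1.0:
        raise ValueError("max_false_flag_rate must be in [0, 1)")
    ranked = sorted(genuine_scores)
    # How many genuine sessions we are willing to flag by mistake.
    allowed = int(len(ranked) * max_false_flag_rate)
    # Everything strictly above this value is flagged; at most `allowed`
    # genuine scores sit above it (fewer if there are ties).
    return ranked[len(ranked) - allowed - 1]


def detection_rate(fake_scores: Sequence[float], threshold: float) -> float:
    """Fraction of known deepfake sessions flagged at this threshold."""
    if not fake_scores:
        raise ValueError("need at least one deepfake score")
    return sum(score > threshold for score in fake_scores) / len(fake_scores)
```

Re-running this on each new batch of labeled sessions shows whether an updated model still catches new deepfake styles at an acceptable false-flag rate, which is one way to tell whether retraining has actually helped.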

4. Implement Liveness Detection

Liveness detection is critical in the customer onboarding process, as it distinguishes spoofed or fabricated images and videos from legitimate ones.

Liveness checks verify that the person is actually present during onboarding by capturing cues such as facial movements and spontaneous eye motion, which are difficult for a deepfake image or video to reproduce convincingly.

There are two types of liveness detection systems:

- Active liveness detection: This liveness detection method involves the system asking the user to perform certain specific actions, such as smiling, blinking their eyes, or turning their heads, to ensure that the person is actually present for the onboarding process, and it’s not just a static or spoofed image or video.

- Passive liveness detection: This method uses software to analyze the user’s natural traits, such as micro-expressions and eye movements, without asking the user to perform any action. These subtle cues are difficult for a deepfake to replicate convincingly.

Liveness detection thus significantly strengthens the customer onboarding process and substantially reduces deepfake risks.
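Active liveness detection is essentially a challenge-response protocol. A minimal sketch, assuming a hypothetical vision model (not shown) that reports which actions it observed: the server picks an unpredictable sequence of actions, and the session passes only if the same actions are observed in order within a time limit. Unpredictability is what defeats pre-recorded video; a real-time face swap can still respond to prompts, which is why active checks are usually paired with passive ones. The action names and the 10-second limit are illustrative assumptions.

```python
import secrets
import time
from dataclasses import dataclass
from typing import List, Optional, Sequence

# Hypothetical action vocabulary; a real system uses whatever its
# face-analysis model can reliably recognize.
ACTIONS = ("blink", "smile", "turn_left", "turn_right")


@dataclass
class Challenge:
    actions: List[str]
    issued_at: float
    ttl_seconds: float = 10.0


def issue_challenge(n: int = 3, now: Optional[float] = None) -> Challenge:
    """Pick n actions with a cryptographic RNG so the sequence cannot be predicted."""
    actions = [secrets.choice(ACTIONS) for _ in range(n)]
    issued = time.monotonic() if now is None else now
    return Challenge(actions=actions, issued_at=issued)


def verify_response(challenge: Challenge, observed: Sequence[str], completed_at: float) -> bool:
    """Pass only if every requested action was seen, in order, in time."""
    if completed_at - challenge.issued_at > challenge.ttl_seconds:
        return False  # too slow: leaves room for generating a fake response
    return list(observed) == challenge.actions
```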

5. Perform Biometric Authentication For High-risk Profiles

Biometric authentication factors are much more difficult to replicate. For instance, iris scanning analyzes the intricate texture patterns of the iris, while fingerprint scanning measures the unique lines, ridges, and valleys on the user’s fingertips, enabling accurate and stringent verification during onboarding.

These biometric features make it hard for scammers to replicate the intricate details of a user’s body, helping combat deepfake threats and scams.
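To make iris matching more concrete: iris systems in the style of John Daugman’s work encode iris texture as a binary “iris code” plus a mask marking the bits that are usable (not hidden by eyelids or reflections), and compare two codes by the fraction of mutually usable bits that disagree. The sketch below uses small non-negative Python integers as bit strings; real templates are far longer, matching also searches over small rotations, and the 0.32 threshold is an illustrative assumption.

```python
def fractional_hamming_distance(code_a: int, code_b: int, mask_a: int, mask_b: int) -> float:
    """Fraction of mutually valid bits on which two iris codes disagree.
    Codes and masks are non-negative ints used as bit strings."""
    valid = mask_a & mask_b  # compare only bits usable in both captures
    n_valid = bin(valid).count("1")
    if n_valid == 0:
        raise ValueError("no overlapping valid bits to compare")
    disagreements = bin((code_a ^ code_b) & valid).count("1")
    return disagreements / n_valid


MATCH_THRESHOLD = 0.32  # illustrative assumption; real systems tune this


def same_iris(code_a: int, code_b: int, mask_a: int, mask_b: int,
              threshold: float = MATCH_THRESHOLD) -> bool:
    """Declare a match when the distance is at or below the threshold."""
    return fractional_hamming_distance(code_a, code_b, mask_a, mask_b) <= threshold
```

Matching alone does not stop spoofing; resistance to deepfakes comes from combining it with capture-time checks such as liveness detection.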

6. Leverage Continuous Behavioral Monitoring

Banks and financial institutions are increasingly integrating behavioral analytics into their AI and ML processes.

By analyzing the user’s usual or typical login location or devices, banks can monitor and identify suspicious login attempts from unfamiliar devices or distant geographical locations, making it easier to identify impersonators and deepfake scams.

Thus, by combining biometric authentication, OTP, and other verification technologies with continuous behavioral analysis, banks and financial institutions can identify anomalies and red flags and prevent deepfake risks.
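A minimal sketch of the two signals described above, an unfamiliar device and an “impossible travel” jump between login locations, assuming each login record carries a device identifier, an approximate location (for example from IP geolocation), and a timestamp. The 900 km/h speed limit (roughly airliner cruising speed) and the record layout are hypothetical; in practice these flags would feed a broader risk score rather than block a user on their own.

```python
import math
from dataclasses import dataclass
from typing import List, Set

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # hypothetical: roughly airliner cruising speed


@dataclass
class Login:
    device_id: str
    lat: float
    lon: float
    hours: float  # timestamp in hours since an arbitrary epoch


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp guards against tiny floating-point overshoot above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def risk_flags(previous: Login, current: Login, known_devices: Set[str]) -> List[str]:
    """Return behavioral red flags for the current login."""
    flags = []
    if current.device_id not in known_devices:
        flags.append("unfamiliar_device")
    distance = haversine_km(previous.lat, previous.lon, current.lat, current.lon)
    elapsed = current.hours - previous.hours
    if elapsed > 0:
        speed = distance / elapsed
    else:
        # Simultaneous (or out-of-order) logins from different places.
        speed = math.inf if distance > 0 else 0.0
    if speed > MAX_PLAUSIBLE_SPEED_KMH:
        flags.append("impossible_travel")
    return flags
```

For example, a login from London one hour after a login from Mumbai implies a speed of several thousand km/h and is flagged, while the same trip twelve hours apart is not.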

How Can Arya AI Help Combat Deepfakes?

Combating deepfakes is a moving target, so it requires a vigilant, rigorous approach that detects and prevents threats efficiently. At Arya AI, we offer secure, easy-to-integrate APIs that streamline user onboarding and help prevent cybersecurity risks such as deepfakes.

Here are some of the powerful APIs that you can integrate within your existing systems:

- The Deepfake Detection App uses advanced AI algorithms and deep learning capabilities to identify and detect synthetic deepfake images, audio, and videos in real time with high accuracy by distinguishing manipulated media from genuine ones.

- The Passive Face Liveness Detection module works as an anti-spoofing technology by distinguishing between a live image and a spoofed 2D or 3D printed user image, preventing presentation and deepfake attacks, and verifying whether the user is present and live during the identity verification and onboarding process.

- The Face Verification App recognizes and matches the user’s face from several sources, such as surveillance footage, images, or documents, verifying the user’s authenticity accurately in less than a minute.

- KYC Extraction streamlines the customer onboarding process by automating identity verification and compliance checks. Integrating seamlessly with face verification, it ensures accurate, secure, and efficient customer authentication.

You can also explore the other APIs we provide.

Wrapping Up

Deepfake is a growing concern for organizations and financial institutions across the world. With fraudsters continually exploiting sophisticated AI techniques and refining their strategies, banks and global organizations need to become more vigilant to stay ahead of the fraudsters and prevent risks of deepfakes and other emerging attacks.

Deepfakes pose an especially serious threat to user onboarding at banks and financial organizations, as scammers can exploit genuine users’ identities in multiple ways. Advanced deepfake detection technologies can help mitigate these risks.

So, besides taking the preventative strategies mentioned above to combat deepfakes, make sure to also check out and opt for our powerful APIs that help proactively identify deepfake scams and maintain customer trust and brand credibility.

Want to fight deepfake scams with Arya AI? Contact us today to learn more!