Discerning what’s real and what’s fake on the internet is not easy anymore. Fraudsters are using new and emerging measures, such as deepfakes and AI-generated images, to exploit vulnerabilities and break into critical systems.

Deepfake scams have caused an uproar in key industries like banking, financial services, and insurance (BFSI), where fraudsters deepfake customers’ images in an attempt to hack into their accounts. This makes AI-generated images a major concern for customer onboarding and identification systems.

Cybercriminals are always finding innovative ways to break into the most robust and sophisticated security solutions, making it imperative to find systems that help detect AI-generated selfies and images and prevent unauthorized login access.

In this article, we’ll see how AI-generated selfies and images are significant threats to businesses and financial institutions, and how they can be detected before causing any damage.

What are AI-generated Selfies?

As the name suggests, AI-generated selfies are fake or synthetic selfies or images created using Artificial Intelligence. Fraudsters often leverage AI selfie generators and tools to create these selfies.

Technologies like Generative AI have made it so easy and convenient to create synthetic selfies and images that, on average, people create 34 million AI images per day.

Many AI selfie generators use Generative Adversarial Networks (GANs), which pair two AI models. One model, the ‘generator,’ creates the AI images, while the other, the ‘discriminator,’ evaluates how authentic those images look.

During training, the discriminator’s verdicts are fed back to the generator, which keeps refining its output until the discriminator can no longer reliably tell the generated images from real ones.
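To make the generator/discriminator loop concrete, here is a deliberately tiny sketch that uses single numbers in place of images. Everything in it is an illustrative assumption rather than how any real selfie generator is built: the function name `train_toy_gan`, the linear generator, the logistic discriminator, and the "real" data drawn from a normal distribution centered on 4.0.

```python
import math
import random


def sigmoid(t: float) -> float:
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Toy 1-D GAN (hypothetical): returns ((a, b), (w, c)) after training."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator: P(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # stand-in for a genuine sample
        noise = rng.gauss(0.0, 1.0)
        fake = a * noise + b

        # Discriminator step: raise P(real) on the real sample,
        # lower it on the generated one.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * (-(1.0 - d_real) * real + d_fake * fake)
        c -= lr * (-(1.0 - d_real) + d_fake)

        # Generator step: use the discriminator's verdict as feedback and
        # move the fake sample toward what the discriminator rates as real.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w  # d(-log D(fake)) / d(fake)
        a -= lr * grad_fake * noise
        b -= lr * grad_fake
    return (a, b), (w, c)
```

Real image generators replace these two-parameter models with deep neural networks, but the alternating update pattern is the same idea: the discriminator learns to separate real from fake, and the generator learns from that verdict.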

As this technology evolves and produces increasingly realistic fake images, it poses a major threat to banks and financial institutions. Because cybercriminals can use AI-generated images to get past customer login checks, putting sophisticated safeguards in place is crucial for processes like KYC.

Why are AI-generated Selfies a Threat to Organizations?

As mentioned earlier, AI-generated selfies and images pose a significant threat to organizations because of their potential use cases in unscrupulous activities.

Through hyper-realistic AI images, fraudsters can steal a genuine user’s or customer’s identity to trick facial recognition systems in the customer onboarding/login process and bypass these processes to take over customer accounts.

Here is a rundown of why AI-generated selfies are considered a huge threat to banks and financial institutions:

- AI-generated selfies and images complicate regulatory processes such as Know Your Customer (KYC). Failing to catch them can lead to non-compliance with these strict regulations and, in turn, to legal sanctions and penalties.

- By using AI-generated selfies to create convincing fake profiles on email, social media, and messaging platforms, fraudsters can trick employees and customers into transferring funds or handing over sensitive information.

- By impersonating high-ranking officials and executives, such as CEOs, fraudsters can create and spread fake news, leading to damaged business reputation, loss of customer trust, and legal challenges.

- Another way fraudsters can damage a company’s image is by impersonating an employee, customer, or even customer service representative to spread misleading information.

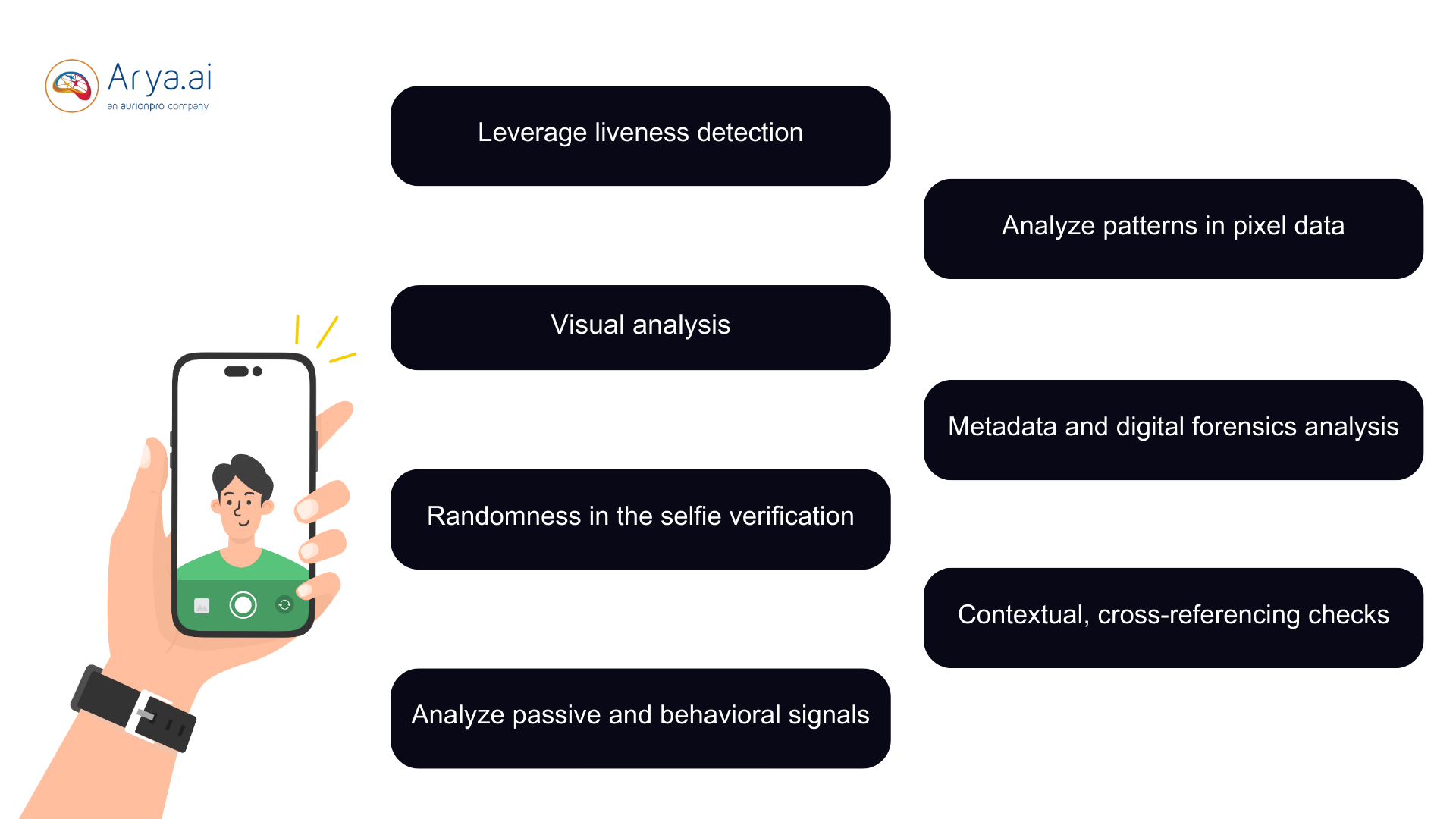

Top Ways To Detect AI-Generated Selfies

AI-generated selfies and images can be detected in several ways, and doing so is of paramount importance to prevent financial and reputational risks. Here are a few ways to combat AI-generated selfies:

1. Leverage liveness detection

Liveness detection is usually used in biometric verification to ensure the user’s presence during the onboarding and verification process. This helps ensure that the selfie is being taken by a real and live person and not generated synthetically by AI.

The process typically involves using techniques that require users to perform specific actions, such as eye blinking, turning their heads, smiling, etc., to differentiate between AI-generated and real human selfies.
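As one illustration of an action-based check, a blink can be detected from eye landmarks using the eye aspect ratio (EAR), a widely used heuristic that drops sharply when the eye closes. The sketch below assumes a separate face-landmark model (not shown) already supplies six points per eye for each video frame. The `threshold` and `min_frames` values are placeholder assumptions that would need tuning on real footage.

```python
from math import dist
from typing import List, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: List[Point]) -> float:
    """EAR for six eye landmarks p1..p6, with the eye corners at p1 and p4."""
    p1, p2, p3, p4, p5, p6 = eye
    # Two vertical eyelid distances divided by the horizontal eye width.
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def count_blinks(ear_per_frame: List[float],
                 threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` closed-eye frames that end with the eye reopening."""
    blinks = 0
    closed_run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    # A run still open at the end of the clip is not counted as a blink.
    return blinks
```

A static AI-generated image replayed to the camera would produce a flat EAR series and therefore zero blinks when the user is asked to blink. Attackers who replay video can defeat a blink check on its own, which is why it is usually one signal among several.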

Combining liveness detection with other biometric factors, such as fingerprint scanning and voice recognition, makes it significantly harder for fraudsters to pass through the verification system.

2. Visual analysis

Visual analysis is one of the most direct and straightforward ways to detect AI-generated selfies. Experts analyze the images to identify the uncanny visual characteristics.

Because AI still struggles to render natural human features, anomalies such as unnatural skin textures and patterns, inconsistent lighting, and background abnormalities can give a synthetic selfie away. However, as the images get more sophisticated and realistic, visual analysis alone is no longer a reliable mode of detection.

3. Include randomness in the selfie verification process

A verification process that only asks for a simple, straight-on selfie is predictable, which makes it easy for fraudsters to pass it with an AI-generated selfie prepared in advance.

Hence, it is important to bring random elements into the verification process, such as asking for a pose that changes from one attempt to the next. This minimizes the chances of fraudulent and unauthorized login attempts succeeding.

The more varied and unpredictable the requested poses are, the harder it is, and the longer it takes, for fraudsters to replicate them and bypass the selfie verification process.
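A randomized pose step could be sketched as follows. The pose names, the 30-second expiry, and the functions `issue_challenge` and `verify_response` are all hypothetical and not part of any particular product. The sketch also assumes a separate vision model (not shown) reports which poses it detected in the captured frames.

```python
import secrets
import time
from typing import List, Optional

# Hypothetical pose vocabulary; a real system would define its own.
POSES = ["look_left", "look_right", "look_up", "tilt_head", "smile", "raise_eyebrows"]


def issue_challenge(num_poses: int = 3, ttl_seconds: int = 30) -> dict:
    """Create an unpredictable, short-lived pose sequence for one attempt."""
    rng = secrets.SystemRandom()  # OS-backed randomness, not a seeded PRNG
    return {
        "nonce": secrets.token_hex(16),
        "poses": rng.sample(POSES, num_poses),
        "expires_at": time.time() + ttl_seconds,
    }


def verify_response(challenge: dict, nonce: str, detected_poses: List[str],
                    now: Optional[float] = None) -> bool:
    """Accept only the right poses, in order, for this challenge, before expiry."""
    now = time.time() if now is None else now
    if now > challenge["expires_at"]:
        return False
    if not secrets.compare_digest(nonce, challenge["nonce"]):
        return False
    return detected_poses == challenge["poses"]
```

Tying each attempt to a one-time nonce and a short expiry means a selfie or video prepared for an earlier challenge cannot simply be replayed against a later one.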

4. Analyze passive and behavioral signals

Collecting risk signals over a period of time and analyzing them gives a better chance at tailoring the verification process and identifying risk signals much quicker to prevent fraudulent login attempts.

Hence, collecting and analyzing passive and behavioral risk signals is important.

- Passive signals are provided by the customer’s or user’s device when they attempt to log in. These signals include the user’s device’s IP address, VPN detection, browser fingerprint, location, device fingerprint, etc. Risks associated with these signals or deviations from them facilitate detecting anomalies or unauthorized login attempts.

- Behavioral signals describe how the user behaves, such as keystrokes, mouse clicks, distraction, hesitation in taking a selfie, etc., which makes it easier to differentiate between an authentic user and a bot or an AI-generated selfie.

Analyzing these signals allows banks and financial institutions to truly know and understand their customers. Any deviations from their normal user behavior can be a sign of AI fraud and synthetic identity.
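One simple way to combine passive and behavioral signals is a weighted risk score that decides whether to allow the login, ask for extra verification, or send it for review. The sketch below is only illustrative: the field names, weights, and thresholds are made-up assumptions and would need to be calibrated on real traffic.

```python
from dataclasses import dataclass


@dataclass
class LoginSignals:
    # Passive signals reported by the device and network.
    new_device: bool
    vpn_detected: bool
    ip_country_mismatch: bool
    # Behavioral signals compared against the user's own history.
    typing_speed_zscore: float        # deviation from the user's baseline
    seconds_to_capture_selfie: float  # near-instant capture can hint at injection


def risk_score(s: LoginSignals) -> float:
    """Return a score in [0, 1]; the weights are illustrative assumptions."""
    score = 0.0
    if s.new_device:
        score += 0.25
    if s.vpn_detected:
        score += 0.15
    if s.ip_country_mismatch:
        score += 0.25
    if abs(s.typing_speed_zscore) > 3.0:
        score += 0.20
    if s.seconds_to_capture_selfie < 1.0:
        score += 0.15
    return min(score, 1.0)


def decide(score: float) -> str:
    """Map a score to an action using hypothetical thresholds."""
    if score >= 0.6:
        return "manual_review"
    if score >= 0.3:
        return "step_up_verification"
    return "allow"
```

For example, a login from a known device with normal typing and a few seconds of hesitation before the selfie scores 0.0 and is allowed, while a new device in an unexpected country already triggers step-up verification.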

5. Analyze patterns in pixel data

High pixelation, unnatural skin smoothing, and other abnormalities around the selfie’s facial features are common signs of synthetic and AI-generated selfies.

AI-generated selfies often show high pixelation for several reasons, such as low resolution, rendering artifacts like patchy or degraded areas in the image, and post-processing techniques like sharpening or upscaling, which introduce visible pixel artifacts.

Using advanced deepfake detection tools can help identify patterns and anomalies in images, which are signs of AI-generated selfies.
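As a toy illustration of what "patterns in pixel data" can mean, the sketch below measures how much fine detail a grayscale face crop contains by taking the variance of a Laplacian filter response. A very low value can hint at unnaturally smooth skin. This is a simple heuristic under stated assumptions (the image is already cropped, converted to grayscale, and at least 3x3 pixels), not a deepfake detector, and any cutoff would have to be calibrated on real data.

```python
from statistics import pvariance
from typing import List, Sequence


def laplacian_variance(gray: Sequence[Sequence[float]]) -> float:
    """Variance of the 4-neighbour Laplacian over the interior pixels.

    Low values mean little local detail (very smooth regions);
    high values mean strong edges or texture.
    """
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses: List[float] = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return pvariance(responses)
```

In practice such a score would be one feature among many fed into a trained detection model, since genuine photos can also be smoothed by beauty filters or compression.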

6. Metadata and digital forensics analysis

An image’s metadata, such as EXIF (Exchangeable Image File Format), contains technical information about the image, such as the camera and lens used, settings, time and date the image was taken, etc. It provides clues about the image’s origin and is typically absent in AI-generated images.

You can use different forensics tools to track the origin of an image or selfie and analyze its digital footprint. If you cannot trace an image to its original source and, upon inspection, find missing metadata or inconsistent or unusual data fields, it could be a sign of an AI-generated selfie or image.
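A first-pass metadata check can be as simple as testing whether a JPEG carries an EXIF block at all. The sketch below walks the JPEG segment headers with the standard library only and looks for an APP1 segment that starts with the `Exif` identifier. The function name `has_exif_segment` is an assumption for illustration, and the parser is intentionally minimal: it stops at the start of the image data and does not decode individual EXIF fields.

```python
import struct


def has_exif_segment(jpeg_bytes: bytes) -> bool:
    """Return True if the JPEG header contains an APP1 segment holding EXIF data."""
    if jpeg_bytes[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            break  # not at a marker; stop rather than guess
        marker = jpeg_bytes[pos + 1]
        if marker in (0xDA, 0xD9):  # start of scan / end of image
            break
        # The segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        payload = jpeg_bytes[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False
```

Missing EXIF is only a weak hint, because many apps and platforms strip metadata from genuine photos and metadata can also be forged, so this check works best as one input to a broader forensic review.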

7. Contextual and cross-referencing checks

Cross-checking and referencing the selfies users upload with publicly available images on social media platforms can provide better context about the selfie’s authenticity and reveal discrepancies, if any, in the AI-generated image.

For instance, if an uploaded selfie is identical or nearly identical to an image the user posted on social media a year ago, it indicates a discrepancy: the selfie was probably not captured live and may have been reused or synthetically derived from that public image.
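One way to automate this kind of comparison is a perceptual hash, which stays stable under small edits such as brightness changes or recompression. The sketch below uses a basic average hash and assumes both images have already been resized to the same small grayscale grid (for example 8x8) by an imaging library that is not shown. The names and the `max_distance` cutoff are illustrative assumptions.

```python
from typing import Sequence


def average_hash(gray: Sequence[Sequence[int]]) -> int:
    """Build a bit string: 1 where a pixel is brighter than the image mean."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which the two hashes differ."""
    return bin(a ^ b).count("1")


def looks_reused(selfie: Sequence[Sequence[int]],
                 public_image: Sequence[Sequence[int]],
                 max_distance: int = 5) -> bool:
    """Flag the selfie if its hash is within `max_distance` bits of a public image."""
    distance = hamming_distance(average_hash(selfie), average_hash(public_image))
    return distance <= max_distance
```

A near-zero distance between a "live" selfie and an old public photo is a reason to escalate the check, not proof of fraud on its own.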

Detect AI-generated Selfies With Arya AI

The AI revolution is solving numerous problems for enterprises, but miscreants who use the technology for unscrupulous ends pose a threat.

Recognizing this threat, we at Arya AI offer reliable and robust Apps that help distinguish real and authentic images and selfies from AI-generated ones.

- Arya’s Deepfake Detection App leverages state-of-the-art algorithms to identify AI-generated selfies with unparalleled accuracy. Whether it's subtle imperfections in texture or inconsistencies in facial features, Arya AI’s solution scans and analyzes images to detect even the most convincingly altered photos.

- The Passive Face Liveness Detection App works as an anti-spoofing technology that detects 2D printed, 3D printed, or digitally displayed copies of a user’s selfie by identifying spoofing artifacts and anomalies. The module predicts whether the user is present in real time during the capture of the selfie, identifying AI-generated selfies and preventing presentation attacks.

- The Face Verification App provides an automated solution for customer verification during user onboarding. It recognizes and matches users’ faces from multiple sources to determine their authenticity.

Our apps process images instantly, providing immediate feedback on the authenticity of a selfie. This delivers operational efficiency without compromising on accuracy. This technology is essential for industries like finance, insurance, etc., where verifying identity and preventing fraud is paramount.

You can easily integrate our Apps within your existing systems without much hassle to ensure a secure and reliable environment.

Wrapping up

Generating images with AI can be done within a few clicks. The AI image generator market size is projected to grow at a staggering CAGR of 17.4%, from $299,295 in 2023 to a whopping $917,448 in 2030.

As this market skyrockets, so do the risks of deepfakes and AI-generated media being used for fraudulent activities. Hence, building robust and reliable verification systems is paramount to stop fraudsters from deceiving and manipulating them.

If you want to strengthen your verification and onboarding system, try opting for our secure Arya Apps. These help detect deepfake and synthetic images, protecting your company against fraud and cybercriminal risks.

Contact us today to learn more and test out the APIs for free.